The power of a unifying view

Bertrand Meyer

A variant of this article appeared in Software Development Magazine, June 2001, as part of the "Beyond Objects" column alternately written by Clemens Szyperski, Grady Booch and Bertrand Meyer. © Software Development Magazine, 2001.

After it has divided, science unifies. Then it will divide again.

Hoping to conquer, we divide. We distinguish. We classify. We split. Animal or vegetable? Wave or particle? Specification or implementation? Method or language? Object or component?

Dividing is good, especially at the beginning. In a new field of study, a jumble of confusing facts and concepts confronts us; classification is our lifeline. Software engineering, as a serious discipline beyond the mere task of writing code, began this way: The early practitioners of the waterfall model taught us to distinguish between the tasks of understanding the problem, building the architecture, implementing the internals, validating the result and installing the product. With top-down functional decomposition, Harlan Mills and Niklaus Wirth showed how to split a program into a number of functions. Dijkstra, Hoare and Abrial taught us to consider the software separately from its mathematical specification. Liskov revealed that a data structure is just one implementation of an abstract data type. Without such distinctions, we'd never get anywhere.

But once they have overcome the basic confusions, members of every scientific or technical field learn how to unify things by detecting profound similarities among phenomena hitherto considered unrelated. Light, heat, sound, X-rays, radio, infrared emissions and magnetism all appear in the end as facets of the same basic processes. Energy is matter in a different form; matter is potential energy. Sets of numbers, functions and geometric transformations are but special cases of the mathematical notion of group. People may or may not have a soul (I won't explore this particular issue further), but genetically, we're fruit flies with a few extra genes.

Not everything that was split should be rejoined. At the beginning of the computer era, the idea of treating algorithms and data identically seemed elegant, leading to self-modifying programs; we don't generally find such techniques very appealing today (except in weaker reincarnations, such as dynamic binding in object-oriented languages). Another counter-example, cited here at the risk of disappointing some of my Smalltalk friends, is the idea that classes and objects are the same. They are not. Although it's fruitful, through reflection libraries and meta-data, to define objects that represent classes, both the theory and the practice of OO development are easier if we retain a clear distinction between an instance and a class; between an object and its type.

Yet another case of unification going too far is, in my opinion, the attempt by Kristen Nygaard (the originator of Simula and OO programming) and Ole Lehrmann Madsen, in their Beta programming language, to merge the notions of class, type, procedure, coroutine, exception and others into a single construct: the pattern. It's elegant, but removes useful distinctions. More generally, we will need to retain some divisions if we don't want to end up with a giant muddle. The question is what we should unify and what should remain separate.

The Great Unifier

Our field has much to gain from unification. "Mainframe development," "minicomputer applications" (anyone remember minicomputers?), "departmental computing" and "microcomputer programming" used to pass as different disciplines, but the boundaries have faded.
For a long time, a non-pacific ocean kept the worlds of scientific computing and business data processing (the Fortran and Cobol crowds) safely apart, but today's scientific applications are all about databases, graphics and networking, while the people who build derivative trading and other banking applications speak of finite element methods, integration over meshes and mathematical software libraries. Both camps are increasingly influenced by the culture of the third original continent, "systems programming," and its fetish tool, C.

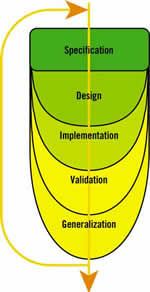

Object technology is the great unifier. Not for the muddled kind of unification (everything is in everything), since OO principles start with the critical distinction of data vs. function. But the principles also enable us, in the practice of large-scale enterprise development, to remove many unproductive and artificial divisions.

An example is the strict separation - present in the waterfall model of the life cycle and even in most of its more flexible successors - between successive steps of analysis, design and so on. Good OO development provides a common framework for all these activities. Eiffel (the method with which I have been working) achieves this with a common notation that handles all the activities of software development, from the most abstract to the most concrete, from the most user- and business-oriented (feasibility study and requirements analysis) to the most technical (debugging, deployment and maintenance). The process applied to each subsystem is not a waterfall or even a spiral, but a continuous loop (see Figure 1).

The Limits of UML

Behind UML, I still see the waterfall-influenced view that programming is something done late in the process, using tools and notations (programming languages) meant only for implementation and hence removed from the nobler tasks of analysis and design. Eiffel embodies a different idea: OO tools and notations exist to help us think throughout the process. Object technology isn't simply about implementing code; it helps us understand problems, model systems, devise architectures and fill in details. So Eiffel isn't just a programming language competing with C++ or Java. It also exists in a more general realm that includes analysis and design tools. Eiffel users appreciate the ability to rely on a single set of concepts, tools and notations at all stages and levels of abstraction. The benefits of this unifying force are significant.

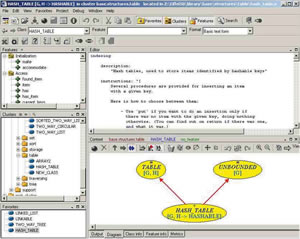

To understand how this approach differs from the more common use of a high-level graphical tool, usually UML-based, for analysis, and an unrelated programming language for implementation, see Figure 2, which shows the EiffelStudio Diagram view. This is simply a tab that displays a graphical representation of the current cluster's architecture. Modifying the class texts will update the view, and you can also work graphically on the diagram, if you prefer (adding classes, attributes, routines, inheritance relations and so on); changes will be immediately reflected in the text. This is not just "round-trip engineering" - it's "no-trip" engineering, which lets you manipulate a single product (again, the virtues of unification) through any of a number of available views.

One argument for the UML approach is that it lets you target your model to various languages. Some organizations may indeed prefer to keep the model and the implementations independent. Eiffel development is appropriate for those who are more attracted by the benefits of a unifying approach (which, at the same time, can integrate other programming languages and tools).

Object Technology's Promise

This concept of a single, reversible process takes the great promise of object technology to its logical conclusion. At a recent conference, Kristen Nygaard showed a copy of the first report on Simula, produced in the 1960s, with the title "a programming and description language," showing that the technology's creators had envisioned this dual goal right from the beginning. Use of the same tools and notations for both modeling and implementation (as well as much else) yields some of the major benefits of well-applied OO development.

Here is a specific case in which it's easy to miss the power of unity. A number of recent OO languages have a notion of interface, separate from classes. An interface has no implementation at all; it's made of features that are abstract or "deferred."
A class is concrete; all of its features are implemented or "effective." Multiple inheritance, in some languages, is restricted to interfaces.

The problem with this notion is that it renounces the generality of object techniques and their applicability throughout the life cycle; you do get that generality with a single notion of class covering the full spectrum from completely deferred (corresponding to interfaces) to completely effective. It is often good to start out with completely deferred classes and refine them, through inheritance or other means, until you get a complete implementation or, more generally, several implementations (as is possible if you use inheritance as your refinement mechanism).

Intermediate classes in this process often use a very powerful pattern, central to the OO mode of development: include effective routines that call deferred ones. This could be, for example, an event processing loop that calls a number of routines in reaction to specific events (in a GUI, a Web interface or a database processing system); these routines will be specified only in descendants.

Another example is a COMPARABLE class, which (as in the Eiffel basic libraries) describes objects ordered by a total order relation. The feature "less than" in that class should be deferred, but "less than or equal" should not, since we can express a <= b in terms of a < b and a = b. Similarly, "greater than" and "greater than or equal" should be effective and defined in terms of "less than." Absent this capability, COMPARABLE works as an interface, with everything deferred. But then every descendant must re-implement "less than or equal," "greater than," and "greater than or equal" in the same way, which is tedious, defeats reuse and may cause errors because class COMPARABLE has no way to force all descendants to use the same implementations.
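The COMPARABLE idea can be sketched outside Eiffel. What follows is a minimal Python analogue, not the Eiffel library class: the names `Comparable` and `Version` are assumptions made for illustration, and Python's abstract methods stand in for deferred features. Only "less than" is left deferred; the other three comparisons are effective routines written once in terms of it.

```python
from abc import ABC, abstractmethod


class Comparable(ABC):
    """Illustrative analogue of a partially deferred class (hypothetical name)."""

    @abstractmethod
    def __lt__(self, other):
        """Deferred: every descendant must supply its own 'less than'."""

    # Effective routines: defined once here, in terms of the deferred one.
    def __le__(self, other):
        return self < other or self == other

    def __gt__(self, other):
        return other < self

    def __ge__(self, other):
        return other <= self


class Version(Comparable):
    """Hypothetical descendant: supplies only equality and 'less than'."""

    def __init__(self, major, minor):
        self.major = major
        self.minor = minor

    def __eq__(self, other):
        return (self.major, self.minor) == (other.major, other.minor)

    def __lt__(self, other):
        return (self.major, self.minor) < (other.major, other.minor)
```

With this in place, `Version(1, 2) <= Version(1, 3)` works even though `Version` never defines "less than or equal," and attempting to instantiate `Comparable` itself raises a `TypeError` because its deferred feature is missing. Python's standard library provides `functools.total_ordering` for a similar purpose.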
This distinction between classes and interfaces illustrates how much you can lose by introducing artificial distinctions and renouncing the essential unity that, beyond its appearance of diversity, characterizes the development process.

With inheritance, too, I've found that it's more useful to unify than to separate. Because inheritance is such a multifaceted notion, it's tempting to devise separate mechanisms: one for module extension (sometimes pejoratively called "implementation inheritance," but there's nothing wrong with such a mechanism if used appropriately), another for subtyping and possibly another for delegation. My work on both software architecture and language design has led me to prefer a single, versatile mechanism that can serve all these purposes, keeping the architecture and the language simple.

Components vs. Classes

The debate on components vs. classes (or "objects") is yet another dispute that can be clarified with unitary thinking. Clemens Szyperski is right to argue for a delivery-and-deployment unit of higher granularity than the class [see Beyond Objects, Feb. 2000]. You don't usually deploy a class - you deploy a library, a package or an assembly. But the unit of reuse that seems to work best is a group of classes, which can be as small as one class. Microsoft's .NET, the latest of the component models, is a striking vindication of the view that classes are, in the end, the proper unit of discourse for reuse. Despite the difference between using a class as part of a completely controllable OO system and employing it as a general unit of reuse, I've found it more productive to make the notion of class powerful enough to handle as much as possible of what we need for component-based development.

We progress by classification, but we may progress more by discovering commonalities. A sign of any discipline's maturity is its ability to resist the ever-present urge to split hairs. More and more, as I try to understand object technology and practice it, I see it as an antidote to this constant temptation, and a powerful unifying factor in almost everything we do as we try to build the best software we can.
